How fruit flies achieve accurate visual behavior despite changing light conditions

Researchers identify neuronal networks and mechanisms that show how contrasts can be rapidly and reliably perceived even when light levels vary

When light conditions change rapidly, our eyes have to respond within fractions of a second to maintain stable visual processing. This is necessary when, for example, we drive through a forest and move through alternating stretches of shadow and bright sunlight. "In situations like these, it is not enough for the photoreceptors to adapt, but an additional corrective mechanism is required," said Professor Marion Silies of Johannes Gutenberg University Mainz (JGU). Earlier work by her research group had already demonstrated that such a corrective 'gain control' mechanism exists in the fruit fly Drosophila melanogaster, where it acts directly downstream of the photoreceptors. Silies' team has now identified the algorithms, mechanisms, and neuronal networks that enable the fly to sustain stable visual processing when light levels change rapidly. The corresponding article was recently published in Nature Communications.

Rapid changes in luminance challenge stable visual processing

Our vision needs to function accurately in many different situations – when we move through our surroundings as well as when our eyes follow an object that moves from light into shade. This applies to us humans and to the many thousands of animal species that rely heavily on vision to navigate. Rapid changes in luminance are also a problem for technical systems that process visual information, such as camera-based navigation systems. Hence, many self-driving cars depend on additional radar- or lidar-based technology to properly compute the contrast of an object relative to its background. "Animals are capable of doing this without such technology. Therefore, we decided to see what we could learn from animals about how visual information is stably processed under constantly changing lighting conditions," said Marion Silies, explaining the research question.

Combination of theoretical and experimental approaches

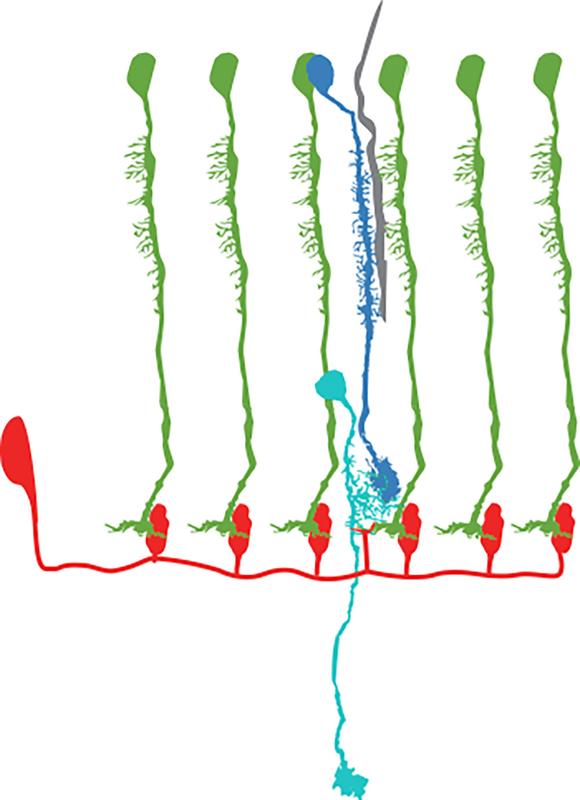

The compound eye of Drosophila melanogaster consists of around 800 individual units, or ommatidia. The contrast between an object and its background is computed postsynaptic to the photoreceptors. However, if luminance conditions suddenly change, as in the case of an object moving into the shadow of a tree, the contrast responses will differ. Without gain control, this would affect all subsequent stages of visual processing, and the object would appear different. The recent study, with lead author Dr. Burak Gür, used two-photon microscopy to determine where in the visual circuitry stable contrast responses are first generated. This led to the identification of neuronal cell types that are positioned two synapses downstream of the photoreceptors.
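The effect of a sudden luminance change on contrast responses can be illustrated with a small numerical sketch. It is not taken from the study: the function names and luminance values are hypothetical, and dividing by the background luminance (Weber contrast) is used here only as a textbook stand-in for gain control.

```python
def raw_response(object_lum, background_lum):
    """Uncorrected signal: the plain luminance difference."""
    return object_lum - background_lum


def gain_controlled_response(object_lum, background_lum):
    """Luminance difference divided by the background (Weber contrast)."""
    return (object_lum - background_lum) / background_lum


# The same object, half as bright as its background, seen in sunlight
# and in shade. The shade is assumed to be ten times dimmer
# (illustrative values, arbitrary units).
sun = (50.0, 100.0)    # (object luminance, background luminance)
shade = (5.0, 10.0)

print("raw:", raw_response(*sun), raw_response(*shade))
print("gain-controlled:",
      gain_controlled_response(*sun), gain_controlled_response(*shade))
```

In this toy case the raw difference shrinks tenfold when the object moves into the shade, whereas the response scaled by the background luminance stays the same.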

These cell types respond only very locally to visual information. For the background luminance to be correctly included in computing contrast, this information needs broad spatial pooling, as revealed by a computational model implemented by co-author Dr. Luisa Ramirez. "We started with a theoretical approach that predicted an optimal radius in images of natural environments to capture the background luminance across a particular region in visual space while, in parallel, we were searching for a cell type that had the functional properties to achieve this," said Marion Silies, head of the Neural Circuits lab at the JGU Institute of Developmental Biology and Neurobiology (IDN).

Information on luminance is spatially pooled

The Mainz-based team of neuroscientists has identified a cell type that meets all required criteria. These cells, designated Dm12, pool luminance signals over a specific radius, which in turn corrects the contrast response between the object and its background in rapidly changing light conditions. "We have discovered the algorithms, circuits, and molecular mechanisms that stabilize vision even when rapid luminance changes occur," summarized Silies, who has been investigating the visual system of the fruit fly over the past 15 years. She predicts that luminance gain control in mammals, including humans, is implemented in a similar manner, particularly as the necessary neuronal substrate is available.
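The idea of pooling luminance over a radius to correct contrast can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the model from the paper: the function names, the disc-shaped pooling region, the radius of 3 pixels, and the synthetic scene are all hypothetical.

```python
import numpy as np


def pooled_luminance(image, radius):
    """Mean luminance within a disc of `radius` pixels around each pixel."""
    padded = np.pad(image, radius, mode="edge")  # repeat edge values
    height, width = image.shape
    total = np.zeros_like(image, dtype=float)
    count = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dy * dy + dx * dx <= radius * radius:
                y0, x0 = radius + dy, radius + dx
                total += padded[y0:y0 + height, x0:x0 + width]
                count += 1
    return total / count


def corrected_contrast(image, radius):
    """Contrast of each pixel relative to the locally pooled luminance."""
    background = pooled_luminance(image, radius)
    return (image - background) / background


# Synthetic scene: two identical dark objects, one in sunlight (left half)
# and one in shade that is assumed to be ten times dimmer (right half).
reflectance = np.ones((20, 40))
reflectance[10, 10] = 0.5
reflectance[10, 30] = 0.5
illumination = np.where(np.arange(40) < 20, 100.0, 10.0)
image = reflectance * illumination

contrast = corrected_contrast(image, radius=3)
print("raw difference:", image[10, 10] - 100.0, image[10, 30] - 10.0)
print("corrected contrast:", contrast[10, 10], contrast[10, 30])
```

In this sketch the two objects produce very different raw luminance differences but approximately the same corrected contrast, because each is compared with the luminance pooled from its own surroundings rather than with a single value for the whole scene.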

The work for the research paper "Neural pathways and computations that achieve stable contrast processing tuned to natural scenes" was supported by an ERC Starting Grant awarded to Professor Marion Silies as well as by funds from the Collaborative Research Center 1080 on Molecular and Cellular Mechanisms in Neural Homeostasis and from the Research Unit 5289, RobustCircuit, both financed by the German Research Foundation (DFG).

Images:

https://download.uni-mainz.de/presse/10_idn_visuelles_system_stabilitaet_01.jpg

In the network illustrated here, the information on luminance captured by L3 cells (green) is pooled by Dm neurons (red) in order to correct the signals provided by L2 cells (blue). Tm9 cells (cyan) bring this information together in order to generate stable contrasts. (ill./©: Marion Silies)

https://download.uni-mainz.de/presse/10_idn_visuelles_system_stabilitaet_02.jpg

Cell phone cameras adjust the brightness of a scene using a single value, which explains why the sky is overexposed in the top image and the ground is underexposed in the lower image. The eyes of flies and humans, on the other hand, can generate stable responses to contrast even when there are changes to background luminance. (photo/©: Marion Silies)

Related links:

• https://ncl-idn.biologie.uni-mainz.de/ – Neural Circuits Lab at the JGU Institute of Developmental Biology and Neurobiology

• https://idn.biologie.uni-mainz.de/ – Institute of Developmental Biology and Neurobiology (IDN) at the JGU Faculty of Biology

• https://www.blogs.uni-mainz.de/fb10-biologie-eng/ – Faculty of Biology at JGU

• https://robustcircuit.flygen.org/ – Research Unit 5289 "From Imprecision to Robustness in Neural Circuit Assembly" (RobustCircuit), funded by the German Research Foundation

• https://www.crc1080.com/ – Collaborative Research Center 1080 "Molecular and Cellular Mechanisms in Neural Homeostasis", funded by the German Research Foundation

Read more:

• https://press.uni-mainz.de/neurons-in-the-visual-system-of-flies-exhibit-surprisingly-heterogeneous-wiring/ – press release "Neurons in the visual system of flies exhibit surprisingly heterogeneous wiring" (3 June 2024)

• https://press.uni-mainz.de/franco-german-research-funding-in-the-field-of-biology/ – press release "Franco-German research funding in the field of biology" (8 Jan. 2024)

• https://press.uni-mainz.de/local-motion-detectors-in-fruit-flies-sense-complex-patterns-generated-by-their-own-motion/ – press release "Local motion detectors in fruit flies sense complex patterns generated by their own motion" (5 Apr. 2022)

• https://press.uni-mainz.de/marion-silies-receives-erc-consolidator-grant-for-research-on-adaptive-functions-of-visual-systems/ – press release "Marion Silies receives ERC Consolidator Grant for research on adaptive functions of visual systems" (4 Apr. 2022)

• https://press.uni-mainz.de/fruit-flies-respond-to-rapid-changes-in-the-visual-environment-thanks-to-luminance-sensitive-lamina-neurons/ – press release "Fruit flies respond to rapid changes in the visual environment thanks to luminance-sensitive lamina neurons" (5 Feb. 2020)

• https://www.magazine.uni-mainz.de/how-flies-and-humans-see-the-world/ – JGU Magazine: "How flies and humans see the world" (14 Jan. 2020)

Scientific contact:

Professor Dr. Marion Silies

Institute of Developmental Biology and Neurobiology (IDN)

Johannes Gutenberg University Mainz

55099 Mainz, GERMANY

phone: +49 6131 39-28966

e-mail: msilies@uni-mainz.de

https://idn.biologie.uni-mainz.de/prof-dr-marion-silies/

Original publication:

B. Gür et al., Neural pathways and computations that achieve stable contrast processing tuned to natural scenes, Nature Communications, 3 October 2024,

DOI: 10.1038/s41467-024-52724-5

https://www.nature.com/articles/s41467-024-52724-5
