Hard in theory, easy in practice | ISTA researchers investigate why graph isomorphism algorithms seem to be so effective

Graphs are everywhere. In discrete mathematics, they are structures that show the connections between points, much like a public transportation network. Mathematicians have long sought to develop algorithms that can compare any two graphs. In practice, many algorithms seem always to work efficiently. But in theory, there is no guarantee. In a new arXiv preprint, researchers from the Kwan Group at the Institute of Science and Technology Austria (ISTA) develop a method to understand why.

The difficulty of some mathematical problems lies in not knowing how hard they are. This is the case with an important problem in computer science called “graph isomorphism testing” whereby scientists use algorithms to test whether two graphs are the same. In theory, it cannot be ruled out that the algorithms might run for longer than the age of the universe. But in practice, many algorithms seem to work just fine. Almost always. Why do these algorithms seem to be so effective?

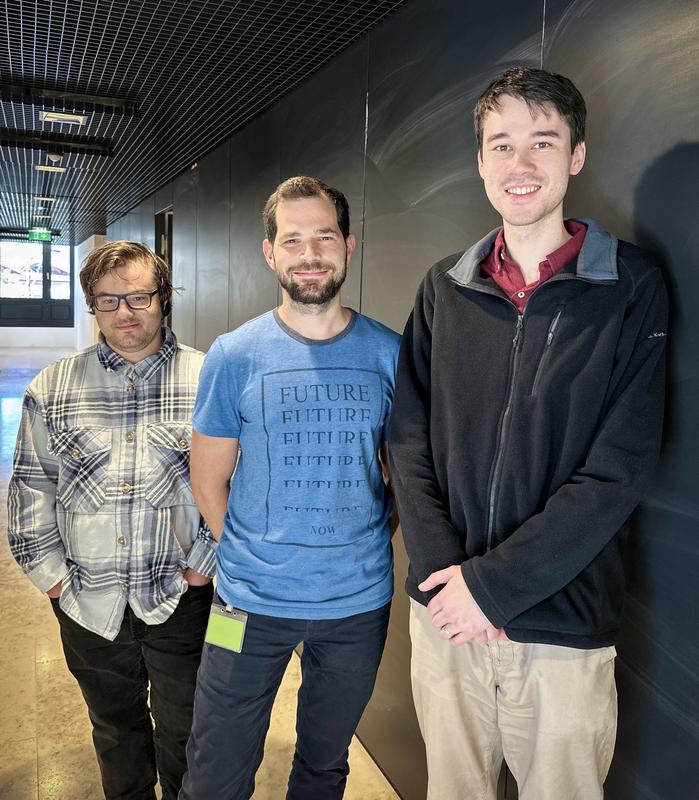

Institute of Science and Technology Austria (ISTA) postdocs Michael Anastos and Benjamin Moore and Assistant Professor Matthew Kwan have tackled this question in their new preprint. “The complexity of the graph isomorphism problem is one of the most intriguing questions in computer science,” says Anastos. Kwan adds, “The graph isomorphism problem does not seem hard, but we somehow can’t yet prove that it’s easy.”

The complexity of modeling networks

Graphs are used to model a wide variety of systems, such as social networks, communication pathways, computer networks, and others. This also applies to chemical compounds. “Chemists use graph isomorphism testing algorithms to compare molecules and build databases of chemicals,” says Kwan. This allows them to check the novelty of their newly synthesized compounds. But graphs, unlike geometric shapes with specific angles and dimensions, represent networks. Thus, they can be extremely complex and their nodes—points of connection—can be positioned freely, which renders them visually unrecognizable. Comparing such complex graphs under all possible conditions has puzzled mathematicians.

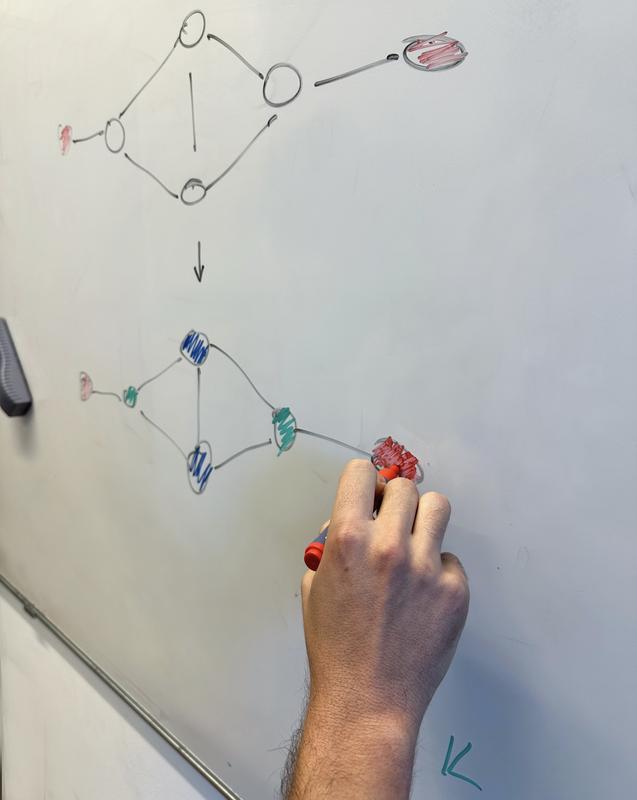

Refining with colors

Mathematicians have developed various strategies to compare graphs. Since the 1970s, algorithms have been able to test graph isomorphism, but in exponential time. This means that the increasing complexity of the graphs increased the algorithm’s running time disproportionately and slowed it down considerably. In 2015, the computer scientist and mathematician László Babai made a breakthrough by proposing an algorithm that runs in “quasi-polynomial time”. This comes quite close to the “polynomial-time” benchmark that separates efficient algorithms from inefficient ones. But already in 1980, another classical theorem—the Babai-Erdős-Selkow theorem—showed that almost all graphs can be relabeled to make easy isomorphism testing possible. This is due to a type of refinement called “color refinement”, whereby the algorithm studies the connections of each node in the graph and assigns it a color. The different colors thus assigned allow the algorithm to easily identify how many other vertices a particular node ‘sees’.

This approach facilitates the comparison across a large pool of graphs. “Algorithms based on color refinement seem to work in most cases in practice. But how can this be the case when the theory says it can take exponential time?” asks Anastos. “Our work does not aim to design algorithms optimized for superior worst-case running guarantees. Instead, we aim to give theoretical justification and provide insight for why algorithms based on color refinement seem so effective.”

Random perturbations and smoothed analysis

To better understand why color refinement seems to do the trick—at least in most cases in practice—Anastos, Kwan, and Moore combined it with another concept called “smoothed analysis”. Introduced in the early 2000s by computer scientists Daniel Spielman and Shang-Hua Teng, this is a general paradigm to bridge the gap between the average-case and worst-case performance of algorithms. Smoothed analysis won Spielman and Teng the Gödel Prize in 2008, an annual prize for outstanding papers in theoretical computer science.

Put simply, smoothed analysis introduces small random perturbations to the connections in a graph rather than focusing purely on worst-case scenarios. “If an algorithm performs well after randomly perturbing any input, this tells us that the bad inputs are ‘rare’, ‘fragile’, or ‘unstable’, which gives some justification for why we don’t tend to see them in practice,” explains Kwan. “No matter what graph we start with, after randomly flipping some edges or connections between the nodes, we show that algorithms based on color refinement become very efficient.” This explains why algorithms based on color refinement do not tend to ‘get stuck’ in exponential running time in practice.

From a puzzling problem to “no problem”?

Away from finding a magical algorithm that can compare any graph, even in the worst-case scenario, the ISTA researchers sought to understand the philosophy of why certain algorithms seem to work in practice. Thus, they aimed to make mathematical sense of a computationally difficult problem. By combining color refinement with smoothed analysis, Anastos and his colleagues showed that the graphs that cause problems for existing algorithms have an extremely fragile structure. As soon as they deviated from this structure, the algorithms performed much more efficiently. “We are coming one step closer to proving that the graph isomorphism problem is, in fact, easy to solve,” concludes Anastos.

-

Funding information

This project was supported by funding from the European Research Council ERC Starting Grant “RANDSTRUCT” No. 101076777, the Austrian Science Fund (FWF) grant 10.55776/ESP3863424, and the European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement No. 101034413.

Originalpublikation:

Michael Anastos, Matthew Kwan & Benjamin Moore. 2024. Smoothed analysis for graph isomorphism. arXiv. DOI: https://doi.org/10.48550/arXiv.2410.06095

Weitere Informationen:

https://ista.ac.at/en/research/kwan-group/ Kwan Research Group at ISTA

Die semantisch ähnlichsten Pressemitteilungen im idw